Date created: Saturday, January 25, 2014 1:38:37 PM. Last modified: Friday, November 10, 2017 2:46:03 PM

BGP PIC Core & Edge

References:

http://www.cisco.com/c/en/us/td/docs/ios-xml/ios/iproute_bgp/configuration/xe-3s/irg-xe-3s-book/irg-bgp-mp-pic.html

http://www.cisco.com/c/en/us/td/docs/ios-xml/ios/iproute_bgp/configuration/xe-3s/irg-xe-3s-book/irg-best-external.html

http://www.cisco.com/c/en/us/td/docs/ios-xml/ios/iproute_bgp/configuration/xe-3s/irg-xe-3s-book/irg-additional-paths.html

http://www.cisco.com/c/en/us/td/docs/ios/iproute_bgp/command/reference/irg_book/irg_bgp1.html

http://www.cisco.com/c/en/us/td/docs/ios/iproute_bgp/command/reference/irg_book/irg_bgp3.html

http://www.cisco.com/c/en/us/td/docs/routers/crs/software/crs_r4-2/routing/command/reference/b_routing_cr42crs/b_routing_cr42crs_chapter_01.html#wp9425093070

http://www.cisco.com/c/en/us/td/docs/routers/7600/ios/15S/configuration/guide/7600_15_0s_book/BGP.html

http://www.cisco.com/c/en/us/td/docs/ios/mpls/configuration/guide/15_0s/mp_15_0s_book/mp_vpn_pece_lnk_prot.html

http://www.cisco.com/c/en/us/td/docs/ios-xml/ios/iproute_bgp/configuration/xe-3s/irg-xe-3s-book/bgp_diverse_path_using_a_diverse-path_route_reflector.html

BRKIPM-2265 - Deploying BGP Fast Convergence BGP-PIC

Contents:

Restrictions

BGP PIC Core

Hierarchical FIB Hardware Notes

BGP PIC Edge

BGP Advertise Best External

BGP Add-Path

BGP Local Convergence (Local Protection)

BGP Diverse Path

Restrictions

Follow these restrictions when using the BGP PIC feature (IOS/7600):

- The BGP PIC feature is supported with BGP multipath and non-multipath.

- In MPLS VPNs, the BGP PIC feature is not supported with MPLS VPN Inter-Autonomous Systems Option B.

- The BGP PIC feature only supports prefixes for IPv4, IPv6, VPNv4, and VPNv6 address families.

- The BGP PIC feature cannot be configured with multicast or L2VPN Virtual Routing and Forwarding (VRF) address families.

- When two PE routers act as mutual (alternate) paths to a CE router, traffic might loop if the CE router fails. In such cases neither PE can reach the CE router, and traffic continues to be forwarded back and forth between the two PEs until its time-to-live (TTL) expires.

- BGP PIC is supported for the following address families:

  - IPv6 with native IPv6 in the service provider core

  - IPv6 and VPNv6 with an IPv4 MPLS core, using 6PE and 6VPE on the service provider edge routers

- If you enable PIC Edge, roughly twice the number of adjacency entries are used.

- When BGP PIC is configured, 2 KB of memory is required per prefix on the RP, the SP and each line card. For example, to scale up to 100,000 prefixes, ensure that at least 200 MB is free on the RP, the SP and each line card.

BGP PIC Core

Remember that Next-Hop Tracking (NHT) works by registering call-backs with the RIB watcher on behalf of the BGP process. These fire either when an IGP prefix becomes unreachable or when an IGP prefix metric changes. When an IGP next hop changes, the BGP Router process must recalculate every path that uses that IGP next hop (this is where the hierarchical FIB helps!). If summary routes are used within the IGP for core links and iBGP peer loopbacks, this can negate BGP NHT detection: the summary remains reachable even when a specific peer loopback is lost, so NHT never sees the failure.

PIC Core can be enabled simply with "cef table output-chain build favor convergence-speed" and disabled with "cef table output-chain build favor memory-utilization". BGP PIC Core also requires "bgp additional-paths install". Without it, backup paths are calculated and installed into the FIB only for IGP routes, because the IGP usually has visibility of all the paths in the area/network whereas BGP computes only the best path. Once PIC Core/BGP PIC Core is enabled, the hierarchical FIB is engaged as follows:

With the hierarchical knob enabled, every prefix points to a shared pointer, and that pointer in turn points to a next-hop adjacency entry. If a next hop becomes unavailable and a backup [IGP] path is available, only the single pointer to the failed next hop needs to be updated to point to an alternate next hop. All prefixes that reference that pointer then use the new next hop after a single CEF update. This protects against next-hop loss within the IGP. It does not improve BGP convergence when, for example, an eBGP path is lost or the iBGP next-hop address changes.
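The pointer indirection described above can be sketched as a toy model. A flat FIB must rewrite every prefix that used the failed adjacency, whereas a hierarchical FIB flips one shared pointer. The `SharedPointer` class and the 198.51.100.x prefixes are hypothetical. The interface/next-hop pairs are borrowed from the PE1 CEF output later in these notes. This is not how CEF is implemented internally.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass(frozen=True)
class Adjacency:
    """Outgoing interface plus IGP next hop (stands in for the L2 rewrite)."""
    interface: str
    next_hop: str


@dataclass
class SharedPointer:
    """Hypothetical model of the shared H-FIB pointer that many prefixes reference."""
    primary: Adjacency
    backup: Optional[Adjacency] = None
    active: Adjacency = field(init=False)

    def __post_init__(self) -> None:
        self.active = self.primary

    def fail_primary(self) -> int:
        """Switch to the pre-computed backup; returns the number of FIB writes."""
        if self.backup is None:
            raise RuntimeError("no pre-computed backup path")
        self.active = self.backup
        return 1  # one write, however many prefixes share this pointer


def flat_failover(fib: Dict[str, Adjacency], old: Adjacency, new: Adjacency) -> int:
    """Flat FIB: every prefix holding the failed adjacency must be rewritten."""
    writes = 0
    for prefix, adj in fib.items():
        if adj == old:
            fib[prefix] = new
            writes += 1
    return writes


primary = Adjacency("FastEthernet0/1", "10.0.101.1")
backup = Adjacency("FastEthernet0/0", "10.0.201.1")
prefixes = [f"198.51.100.{i}/32" for i in range(200)]  # hypothetical prefixes

flat_fib = {p: primary for p in prefixes}
flat_writes = flat_failover(flat_fib, primary, backup)

pointer = SharedPointer(primary, backup)
hfib = {p: pointer for p in prefixes}
hfib_writes = pointer.fail_primary()

print(flat_writes, hfib_writes)  # 200 1
```

The failover cost of the flat table grows with the number of prefixes. The hierarchical version stays at one write, which is the "prefix independent" property the feature is named after.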

With or without the hierarchical knob enabled, the normal forwarding recursion process for iBGP learnt routes is as follows:

- iBGP learns a route with a next-hop (NH) address

- BGP recurses to the IGP for NH reachability

- The IGP resolves the iBGP NH address to an IGP NH address

- The IGP NH address is resolved in the FIB to its adjacency info (populated by the IGP for non-directly connected next hops)

- The FIB contains the layer 2 rewrite and forwarding adjacency results
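The recursion steps above can be sketched as a chain of lookups: BGP next hop, then IGP next hop by longest match, then adjacency. The tables are hypothetical and only loosely modelled on PE4 in this lab. The 10.0.0.0/24 summary is invented to show the NHT caveat mentioned earlier.

```python
import ipaddress
from typing import Dict, List

# Hypothetical tables loosely modelled on PE4 resolving a route via PE1.
bgp_rib: Dict[str, str] = {"192.168.1.0/24": "10.0.0.1"}  # prefix -> iBGP next hop
igp_rib: Dict[str, str] = {
    "10.0.0.1/32": "10.0.104.1",  # PE1 loopback host route
    "10.0.0.0/24": "10.0.204.1",  # hypothetical summary covering all loopbacks
}
adjacency: Dict[str, str] = {
    "10.0.104.1": "FastEthernet0/1",
    "10.0.204.1": "FastEthernet0/0",
}


def longest_match(table: Dict[str, str], address: str) -> str:
    """Return the value for the most specific prefix in `table` containing `address`."""
    addr = ipaddress.ip_address(address)
    candidates = [ipaddress.ip_network(p) for p in table if addr in ipaddress.ip_network(p)]
    if not candidates:
        raise LookupError(f"no route to {address}")
    best = max(candidates, key=lambda net: net.prefixlen)
    return table[str(best)]


def resolve(prefix: str) -> List[str]:
    """Walk BGP next hop -> IGP next hop -> adjacency."""
    bgp_nh = bgp_rib[prefix]
    igp_nh = longest_match(igp_rib, bgp_nh)
    return [prefix, bgp_nh, igp_nh, adjacency[igp_nh]]


print(resolve("192.168.1.0/24"))
# ['192.168.1.0/24', '10.0.0.1', '10.0.104.1', 'FastEthernet0/1']
```

Suppose the 10.0.0.1/32 entry is deleted from `igp_rib`. The lookup then falls back to the summary and still resolves, via 10.0.204.1. This is the behaviour that lets an IGP summary hide a failed iBGP next hop from NHT.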

CEF Recursion:

From the cisco.com notes...

Recursion is the ability to find the next longest matching path when the primary path goes down. When the BGP PIC feature is not installed, and if the next hop to a prefix fails, Cisco Express Forwarding finds the next path to reach the prefix by recursing through the FIB to find the next longest matching path to the prefix. This is useful if the next hop is multiple hops away and there is more than one way of reaching the next hop.

However, with the BGP PIC feature, you may want to disable Cisco Express Forwarding recursion for the following reasons:

- Recursion slows down convergence when Cisco Express Forwarding searches all the FIB entries.

- BGP PIC Edge already precomputes an alternate path, thus eliminating the need for Cisco Express Forwarding recursion.

When the BGP PIC functionality is enabled, Cisco Express Forwarding recursion is disabled by default for two conditions:

- For next hops learned with a /32 network mask (host routes)

- For next hops that are directly connected

For all other cases, Cisco Express Forwarding recursion is enabled. As part of the BGP PIC functionality, you can issue the "bgp recursion host" command to disable or enable Cisco Express Forwarding recursion for BGP host routes. Note: when the BGP PIC feature is enabled, by default, "bgp recursion host" is configured for VPNv4 and VPNv6 address families and disabled for IPv4 and IPv6 address families.
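The default rule quoted above can be sketched as a small predicate. CEF recursion is off for host-route and directly connected next hops, and on otherwise. This sketch does not model the per-address-family "bgp recursion host" default. Treating an IPv6 /128 as a host route is an assumption.

```python
import ipaddress


def cef_recursion_enabled(next_hop_route: str, directly_connected: bool) -> bool:
    """Default CEF recursion behaviour with BGP PIC enabled, per the notes above.

    Recursion is disabled when the next hop is learnt via a host route or is
    directly connected, and stays enabled otherwise. Treating IPv6 /128 as a
    host route is an assumption of this sketch.
    """
    network = ipaddress.ip_network(next_hop_route)
    is_host_route = network.prefixlen == network.max_prefixlen
    return not (is_host_route or directly_connected)


# Compare the "recursive-via-host" / "recursive-via-connected" flags in the outputs below.
print(cef_recursion_enabled("10.0.0.2/32", directly_connected=False))  # False (host route)
print(cef_recursion_enabled("10.1.0.0/30", directly_connected=True))   # False (connected)
print(cef_recursion_enabled("10.0.0.0/24", directly_connected=False))  # True
```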

Hierarchical FIB Hardware Notes

The H-FIB concept is supported by most modern IOS devices and all IOS-XR devices. Cisco IOS devices such as the 7600, ME3600/ME3800, ASR920 and ASR1000 series routers need H-FIB to be explicitly enabled with "cef table output-chain build favor convergence-speed". Other platforms (CRS, XR12k, ASR9k, NX-OS) enable it by default.

PIC-Core Support:

- 7600

  - 12.2(33)SRB: IPv4, non-ECMP

  - 12.2(33)SRC: IPv4, non-ECMP + ECMP / VPNv4, non-ECMP

  - 15.0(1)S: IPv4 + VPNv4, non-ECMP and ECMP

- ASR1k: XE2.5.0

- NX-OS: 5.2

- IOS-XR: 3.4 CRS, 3.3 12k, 3.7 ASR9K

- ME3600X/ME3800X: 15.4(2)

PIC-Edge Support:

- 7600, 7200: 12.2(33)SRE

- ME3600X/ME3800X: 15.4(2)

- ASR1k: XE3.2.0 (IPv4), XE3.3.0 (IPv6)

- NX-OS: Radar

- IOS-XR: Multipath: 3.5, Unipath: 3.9

The IGP convergence time should be optimised:

- Use BFD for fast peer-failure detection.
- Tune SPF and LSA timers for OSPF/IS-IS on IOS (these are tuned by default on IOS-XR and NX-OS).
- Prioritise next-hop prefixes (such as loopbacks) for faster SPF calculation.
- Keep the IGP database as small as possible: no customer routes, and link-nets can be removed (with OSPF "prefix-suppression", for example).
- Leverage OSPF FRR/LFA/rLFA or MPLS-TE FRR.

During a failure, make sure traffic is not sent back into the core (from a PE with a broken link to a CPE) in a form that is subject to an IP lookup. Such traffic will likely be routed back to the same PE and create a loop, so the traffic repair path must be tunnelled (over MPLS, for example). For Internet in a VRF, "per CE" MPLS label allocation mode (one label per next hop) is preferred over per-prefix labelling. With per-CE labels, hundreds of thousands of routes are all updated via a single next-hop pointer.

Whilst PIC Core (H-FIB) is officially supported on the 7600, the platform has to use packet recirculation to provide the H-FIB functionality, which is a serious hindrance. For vanilla IP forwarding the functionality is hacked together by load balancing across CEF adjacencies. For VPNv4 traffic (PIC Edge) the packets are recirculated, which halves the VPNv4 PPS rate. With all CFC line cards this affects the entire router; with DFCs it affects the individual line cards.

This is because a pseudo entry is inserted as the next-hop pointer in the prefix TCAM. For an incoming packet, the longest prefix match on the prefix TCAM returns this pseudo entry instead of the next-hop adjacency pointer. The pseudo entry points to a primary next-hop adjacency, or to a secondary next-hop adjacency during a failure.

A vanilla IP packet therefore needs to be recirculated after the longest prefix match has returned the pseudo entry. The second pass resolves either the primary next-hop adjacency or, during a failure, the secondary one. With per-prefix labelling (which is required for PIC Edge), the PFC matches the incoming MPLS label, which returns a pseudo entry; the packet is recirculated and the pseudo entry is resolved to the primary or secondary adjacency.

If per-VRF labelling were used, the incoming MPLS label would be popped, which tells the PFC which VRF to perform the lookup in. The longest prefix match would occur inside that VRF table, and the packet would then be recirculated to resolve the next-hop adjacency. However, if a pseudo entry were returned, a third packet recirculation would be required, because a pseudo entry would still be returned after the second recirculation. The 7600 hardware doesn't support more than two packet circulations through the PFC.
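As a rough illustration of the paragraph above, the sketch below counts lookup stages per scenario against the 7600's two-pass limit. It assumes each stage costs exactly one pass through the PFC. That is a simplified reading of the text, not a description of the PFC pipeline, and the stage names are illustrative.

```python
from typing import Dict, List

MAX_PFC_PASSES = 2  # the text: no more than two passes through the 7600 PFC

# Simplified reading of the paragraph above: each stage is assumed to cost one pass.
SCENARIOS: Dict[str, List[str]] = {
    "IPv4 with H-FIB": [
        "longest prefix match returns pseudo entry",
        "resolve pseudo entry to primary/secondary adjacency",
    ],
    "VPNv4 per-prefix label": [
        "label lookup returns pseudo entry",
        "resolve pseudo entry to primary/secondary adjacency",
    ],
    "VPNv4 per-VRF label with H-FIB": [
        "pop label to select VRF",
        "longest prefix match in VRF returns pseudo entry",
        "resolve pseudo entry to adjacency",
    ],
}


def passes_needed(stages: List[str]) -> int:
    """Number of PFC passes, under the one-stage-per-pass assumption."""
    return len(stages)


results = {name: passes_needed(stages) for name, stages in SCENARIOS.items()}
for name, passes in results.items():
    verdict = "supported" if passes <= MAX_PFC_PASSES else "not supported"
    print(f"{name}: {passes} passes -> {verdict}")
```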

PIC Edge, however, is supported in the sense that a backup path is pre-calculated, but that path must be programmed into hardware when a failure occurs. When a PE-CE link fails, the number of updates required may be small, so failover is still fast, but not as fast as with H-FIB enabled.

When enabling H-FIB on a 7600 the following points must be taken into consideration:

1. If you enable PIC edge, roughly twice the number of adjacency entries are used.

7606-S#show platform hardware capacity forwarding | s Adjacency

Adjacency usage: Total Used %Used

1048576 135114 13%

This would double to roughly 270,000 CEF adjacencies, leaving about 778,000 free.
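The arithmetic behind that estimate, using the "Total" and "Used" values from the output above. It assumes the "roughly twice" rule of thumb from the restrictions applies exactly.

```python
TOTAL_ADJACENCIES = 1_048_576  # "Total" from the adjacency usage output above
used_today = 135_114           # "Used" from the same output

# Rule of thumb from the restrictions: PIC Edge roughly doubles adjacency usage.
used_with_pic_edge = used_today * 2
free_with_pic_edge = TOTAL_ADJACENCIES - used_with_pic_edge

print(used_with_pic_edge, free_with_pic_edge, f"{used_with_pic_edge / TOTAL_ADJACENCIES:.0%}")
# 270228 778348 26%
```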

2. When BGP PIC is configured, 2KB memory is required per prefix on RP, SP and each line card. For example, if you need to scale up to 100000 prefixes then you should ensure that at least 200 MB is free on RP, SP and each line card.

There are two parts to this point.

Firstly, 2 KB of memory is required per prefix in the FIB. Having 4M prefixes in the BGP RIB, for example, doesn’t change the memory requirement; only the number of prefixes in the FIB incurs this overhead. Also, when using LAN cards that don’t have DFCs (CFC cards), all forwarding is done in the PFC, so “2KB memory is required per prefix on RP, SP and each line card” becomes “just on RP and SP”.

Secondly, calculate how much memory is currently used and whether there is enough free memory. Below, the example 7606-S router with an RSP-720-3CXL has 587,672 IPv4 prefixes in the global routing table, using a total of ~128 MB of memory. At 2 KB per prefix this would jump to ~1.175 GB.

7606-S#show ip route summary

IP routing table name is default (0x0)

IP routing table maximum-paths is 32

Route Source Networks Subnets Replicates Overhead Memory (bytes)

static 1 12 0 780 2340

connected 0 385 0 23260 69300

ospf 1 0 46 0 2940 8464

Intra-area: 37 Inter-area: 2 External-1: 0 External-2: 7

NSSA External-1: 0 NSSA External-2: 0

ospf 10 0 8 0 480 1472

Intra-area: 8 Inter-area: 0 External-1: 0 External-2: 0

NSSA External-1: 0 NSSA External-2: 0

bgp 65001 176591 404159 0 69559140 104535000

External: 410685 Internal: 170065 Local: 0

internal 6470 23454200

Total 183062 404610 0 69586600 128070776
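The projection above can be reproduced from the "Total" row of this output. The notes treat "2KB" as 2,000 bytes, which gives the ~1.175 GB figure. Reading it as 2 KiB (2,048 bytes) is shown as an alternative for comparison, not as the vendor's definition.

```python
# Figures from the "Total" row of the "show ip route summary" output above.
networks = 183_062
subnets = 404_610
rib_memory_bytes = 128_070_776

prefixes = networks + subnets  # routes in the global routing table

# "2KB" per prefix read as 2,000 bytes (as in the text) and as 2 KiB (2,048 bytes).
pic_estimate_decimal = prefixes * 2_000
pic_estimate_binary = prefixes * 2_048

print(prefixes)                              # 587672
print(round(rib_memory_bytes / 1e6))         # 128 (MB used today)
print(round(pic_estimate_decimal / 1e9, 3))  # 1.175 (GB with PIC)
print(round(pic_estimate_binary / 1e9, 3))   # 1.204 (GB with PIC, KiB reading)
```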

This covers only routes in the global routing table. There is no command to see memory usage for routes in VRFs, so instead look at the FIB TCAM usage to see how many entries there are:

7606-S#show platform hardware capacity forwarding | s IPv4

72 bits (IPv4, MPLS, EoM) 983040 714127 73%

144 bits (IP mcast, IPv6) 32768 17 1%

detail: Protocol Used %Used

IPv4 637545 65%

MPLS 76578 8%

EoM 4 1%

IPv6 10 1%

IPv4 mcast 4 1%

IPv6 mcast 3 1%

Adjacency usage: Total Used %Used

1048576 135132 13%

We can therefore estimate that enabling H-FIB would require ~1.28 GB of memory on both the SP and the RP: 637,545 prefixes * 2 KB = 1,275,090 KB ≈ 1.275 GB.

The output below shows there isn’t enough free memory to enable H-FIB on either the RP (~635 MB free) or the SP (~1.18 GB free):

7606-S#show proc mem sorted | i Free

Processor Pool Total: 1680795756 Used: 1045921920 Free: 634873836

I/O Pool Total: 134217728 Used: 52467184 Free: 81750544

7606-S#remote command switch show proc memory sorted | i Free

Processor Pool Total: 1805602772 Used: 628985672 Free: 1176617100

I/O Pool Total: 134217728 Used: 50468720 Free: 83749008
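A minimal sketch of the go/no-go check. It compares the estimated requirement with the Processor Pool "Free" values above. It ignores the line cards and any headroom the router needs for other processes, so treat it as a lower bound on what is required.

```python
# IPv4 FIB TCAM entries and Processor Pool "Free" bytes, from the outputs above.
ipv4_fib_entries = 637_545
free_bytes = {"RP": 634_873_836, "SP": 1_176_617_100}

BYTES_PER_PREFIX = 2_000  # "2KB" per prefix, decimal as in the 1.275 GB estimate
required = ipv4_fib_entries * BYTES_PER_PREFIX  # 1,275,090,000 bytes

shortfall_mb = {}
for processor, free in free_bytes.items():
    shortfall_mb[processor] = max(0, required - free) / 1e6
    print(f"{processor}: enough={free >= required}, short by ~{shortfall_mb[processor]:.0f} MB")
# RP: enough=False, short by ~640 MB
# SP: enough=False, short by ~98 MB
```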

The command “show platform hardware capacity forwarding” above shows the usage of the fixed-size FIB entries in TCAM. This example RSP720-3CXL-10G router has 1M TCAM entries, with ~980k allocated to the 72-bit region used for IPv4, MPLS and EoM. PIC Core increases the size of the FIB in RAM before it is programmed down into the FIB TCAM. In RAM the backup prefixes and the pointer indirection are all pre-calculated, so that the failover time is just the “time to update the hardware table” [“FIB TCAM” or “CEF table”].

BGP PIC Edge

Remember that even without BGP Add-Path, other techniques can advertise more than one exit path to a PE. Two examples:

- Two RRs with route-maps that change the path between edge nodes in the routing update (possibly by manipulating the IGP path).
- Different route distinguishers per PE in an MPLS VPN environment.

The "cef table output-chain build favor convergence-speed" command is a prerequisite for PIC Edge. Without it the benefit is completely negated: backup routes are installed into the RIB but not into the FIB.

PIC Edge can also be achieved by enabling BGP multipath with "router bgp xxx; address-family xxx; maximum-paths ibgp x". However, load-sharing across the IGP to multiple iBGP PEs leads to non-deterministic, harder-to-troubleshoot traffic paths, so that is not the focus here. Multipath might be required for active/active scenarios, but active/standby unipath scenarios are the subject here.

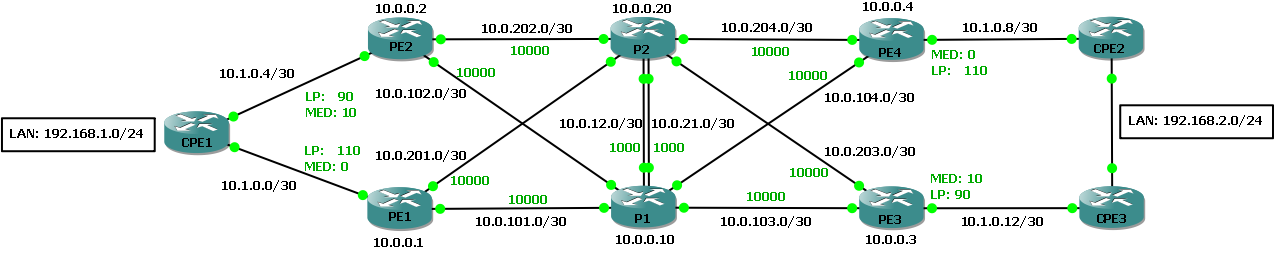

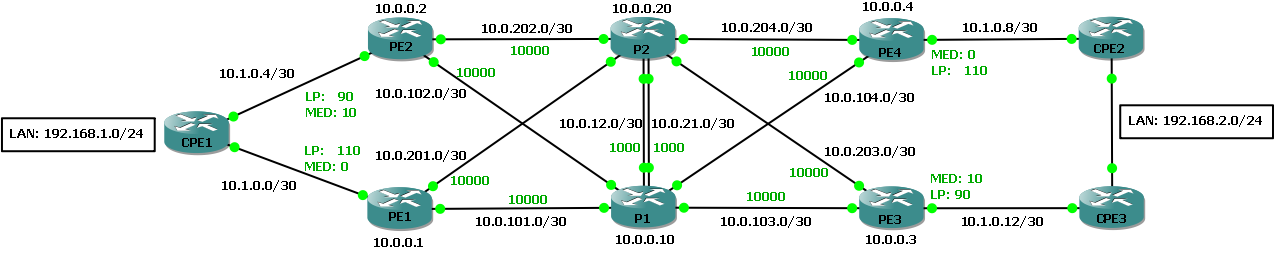

BGP Advertise Best External

BGP advertise-best-external allows a PE to advertise its eBGP-learnt route from a CE into the iBGP mesh, even though it has a preferred route from another iBGP peer. In BGP path selection eBGP beats iBGP, but local preference is evaluated earlier and trumps both. In the example topology:

- Traffic to/from the first customer site, through CPE1, always prefers PE1.
- Traffic to/from the second customer site, which uses dual CPEs, always prefers CPE2 through PE4.
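The interaction described above can be sketched with a deliberately simplified best-path function: weight, then local preference, then eBGP over iBGP. It omits the many other steps of the real IOS algorithm (AS-path length, origin, MED and others). The `best_external` helper is a hypothetical illustration of which path the feature would pick, not the IOS logic. The PE2 paths mirror the lab output below.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Path:
    next_hop: str
    local_pref: int
    ebgp: bool
    weight: int = 0


def best_path(paths: List[Path]) -> Path:
    """Simplified best-path: weight, then local preference, then eBGP over iBGP.

    Real IOS best-path selection has further steps between local preference
    and eBGP-over-iBGP (AS-path length, origin, MED, ...) and more after it.
    """
    return max(paths, key=lambda p: (p.weight, p.local_pref, p.ebgp))


def best_external(paths: List[Path]) -> Optional[Path]:
    """Hypothetical helper: the best eBGP path when the overall best is internal."""
    if best_path(paths).ebgp:
        return None  # the best path is already external and is advertised normally
    external = [p for p in paths if p.ebgp]
    return best_path(external) if external else None


# PE2's view of 192.168.1.0/24: iBGP via PE1 (LP 110) vs eBGP via CPE1 (LP 90).
pe2_paths = [Path("10.0.0.1", 110, ebgp=False), Path("10.1.0.6", 90, ebgp=True)]
print(best_path(pe2_paths).next_hop)      # 10.0.0.1: local preference wins over eBGP
print(best_external(pe2_paths).next_hop)  # 10.1.0.6: the path advertise-best-external would send
```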

Best-external requires the backup path to use a different external next hop. Here PE1 will learn a backup route from PE2 whose external next hop is the address on the /30 between PE2 and CPE1:

In the case that BGP advertise-best-external is used, the iBGP routes go through the following route installation process:

- For each BGP-learnt route, both the best (primary) path and an alternate/backup path are calculated.

- BGP programs both routes via its API to the IP RIB.

- If the RIB selects a BGP route containing a backup/alternate path, it installs the backup/alternate path with the best path (RIB installs an alternate path per route if one is available).

- The RIB programs the route and includes the alternate path in its API with the FIB.

- The FIB (Cisco Express Forwarding) stores an alternate path per prefix (backup prefixes in CEF are marked with a flag). When the primary path goes down CEF searches for the backup/alternate path in a prefix independent manner (CEF also listens to BFD events to rapidly detect local failures).

Couple this with the hierarchical FIB chaining command used for PIC Core. Eligible eBGP routes then have a pre-computed backup path that points to a different iBGP next-hop address. When the primary eBGP next hop is lost, all the FIB prefixes that point to that eBGP next hop are updated together, by updating their shared CEF pointer to point to the backup iBGP next-hop address.
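Why this is "prefix independent" can be sketched by grouping prefixes into shared path-lists keyed by their (primary, backup) next-hop pair. The 172.16.x prefixes are hypothetical; the next hops are taken from this lab. The "without PIC" count is a simplification that charges one best-path run and FIB rewrite per affected prefix.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

PathList = Tuple[str, str]  # (primary next hop, backup next hop)


def build_path_lists(routes: Dict[str, PathList]) -> Dict[PathList, List[str]]:
    """Group prefixes that share the same primary/backup pair into one path-list."""
    groups: Dict[PathList, List[str]] = defaultdict(list)
    for prefix, pair in routes.items():
        groups[pair].append(prefix)
    return dict(groups)


def updates_on_failure(path_lists: Dict[PathList, List[str]], failed_nh: str) -> Tuple[int, int]:
    """Return (updates with PIC, updates without PIC) when `failed_nh` is lost."""
    affected = {pair: pfx for pair, pfx in path_lists.items() if pair[0] == failed_nh}
    with_pic = len(affected)                                  # one pointer flip per path-list
    without_pic = sum(len(pfx) for pfx in affected.values())  # one rewrite per prefix
    return with_pic, without_pic


# Hypothetical PE1 table: 1,000 prefixes behind CPE1 (primary 10.1.0.2, backup PE2 10.0.0.2).
routes: Dict[str, PathList] = {
    f"172.16.{i // 256}.{i % 256}/32": ("10.1.0.2", "10.0.0.2") for i in range(1000)
}
routes["192.168.2.0/24"] = ("10.0.0.4", "10.0.0.3")

path_lists = build_path_lists(routes)
print(len(path_lists))                             # 2
print(updates_on_failure(path_lists, "10.1.0.2"))  # (1, 1000)
```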

Restrictions for BGP Best-External

- The BGP Best External feature will not install a backup path if BGP Multipath is installed and a multipath exists in the BGP table. One of the multipaths automatically acts as a backup for the other paths.

- The BGP Best External feature is not supported with the following features:

  - MPLS VPN Carrier Supporting Carrier

  - MPLS VPN Inter-Autonomous Systems, option B

  - MPLS VPN Per Virtual Routing and Forwarding (VRF) Label

- The BGP Best External feature cannot be configured with Multicast or L2VPN VRF address families.

- The BGP Best External feature cannot be configured on a route reflector, unless it is running Cisco IOS XE Release 3.4S or later.

- The BGP Best External feature does not support NSF/SSO. However, ISSU is supported if both Route Processors have the BGP Best External feature configured.

- The BGP Best External feature can only be configured on VPNv4, VPNv6, IPv4 VRF, and IPv6 VRF address families.

- When you configure the BGP Best External feature using the bgp advertise-best-external command, you need not enable the BGP PIC feature with the bgp additional-paths install command. The BGP PIC feature is automatically enabled by the BGP Best External feature.

- When you configure the BGP Best External feature, it will override the functionality of the "MPLS VPN--BGP Local Convergence" feature. However, you do not have to remove the protection local-prefixes command from the configuration.

BGP Advertise Best-External Example

In the "normal" output below, without advertise-best-external enabled, PE1 has a single route to the CPE1 LAN. PE2 sees its locally learnt route and the PE1 route reflected by RR P1; the reflected route has a higher LP and is preferred. Because BGP advertises only its best path, PE2 doesn't advertise its locally learnt, less-preferred route into the iBGP domain. So only PE2 knows about this alternate, albeit less-preferred, route to the CPE1 LAN via the PE2-CPE1 link.

PE1#show ip route vrf CUST1-VRF1

10.0.0.0/8 is variably subnetted, 2 subnets, 2 masks

C 10.1.0.0/30 is directly connected, FastEthernet1/0.10

L 10.1.0.1/32 is directly connected, FastEthernet1/0.10

B 192.168.1.0/24 [20/0] via 10.1.0.2, 00:43:27

B 192.168.2.0/24 [200/0] via 10.0.0.4, 00:24:42

PE1#show bgp vpnv4 uni vrf CUST1-VRF1 192.168.1.0

BGP routing table entry for 10.0.0.1:101:192.168.1.0/24, version 2

Paths: (1 available, best #1, table CUST1-VRF1)

Advertised to update-groups:

2

Refresh Epoch 1

65001

10.1.0.2 from 10.1.0.2 (192.168.1.1)

Origin incomplete, metric 0, localpref 110, valid, external, best

Extended Community: SoO:65001:1 RT:65001:101

mpls labels in/out 20/nolabel

rx pathid: 0, tx pathid: 0x0

P1#show bgp vpnv4 unicast all

BGP table version is 7, local router ID is 10.0.0.10

Network Next Hop Metric LocPrf Weight Path

Route Distinguisher: 10.0.0.1:101

*>i 192.168.1.0 10.0.0.1 0 110 0 65001 ?

PE2#show bgp vpnv4 unicast vrf CUST1-VRF1

Network Next Hop Metric LocPrf Weight Path

Route Distinguisher: 10.0.0.2:101 (default for vrf CUST1-VRF1)

*>i 192.168.1.0 10.0.0.1 0 110 0 65001 ?

* 10.1.0.6 0 90 0 65001 ?

*>i 192.168.2.0 10.0.0.4 0 110 0 65001 ?

Below, "bgp advertise-best-external" is configured under the VPNv4 address family on PE1 and PE2. PE2 now installs its locally learnt, less-preferred route into RIB > FIB > CEF as a valid backup path. PE2 advertises this route as its best external route into the iBGP domain, and RR P1 reflects it to PE1, which also installs it as a valid external backup path. This only needs to be configured on PE2 to provide a valid backup on PE1. It is configured on both because both provide connectivity to the external prefix, and at some point PE2 might become the primary path.

PE2#show bgp vpnv4 unicast vrf CUST1-VRF1 192.168.1.0

BGP routing table entry for 10.0.0.2:101:192.168.1.0/24, version 13

Paths: (2 available, best #1, table CUST1-VRF1)

Advertise-best-external

Advertised to update-groups:

2

Refresh Epoch 1

65001, imported path from 10.0.0.1:101:192.168.1.0/24 (global)

10.0.0.1 (metric 20001) from 10.0.0.10 (10.0.0.10)

Origin incomplete, metric 0, localpref 110, valid, internal, best

Extended Community: SoO:65001:1 RT:65001:101

Originator: 10.0.0.1, Cluster list: 10.0.0.10 , recursive-via-host

mpls labels in/out 20/20

rx pathid: 0, tx pathid: 0x0

Refresh Epoch 1

65001

10.1.0.6 from 10.1.0.6 (192.168.1.1)

Origin incomplete, metric 0, localpref 90, valid, external, backup/repair, advertise-best-external

Extended Community: SoO:65001:1 RT:65001:101 , recursive-via-connected

mpls labels in/out 20/nolabel

rx pathid: 0, tx pathid: 0

P1#show bgp vpnv4 unicast all 192.168.1.0

BGP routing table entry for 10.0.0.1:101:192.168.1.0/24, version 2

Paths: (1 available, best #1, no table)

Advertised to update-groups:

2

Refresh Epoch 1

65001, (Received from a RR-client)

10.0.0.1 (metric 10001) from 10.0.0.1 (10.0.0.1)

Origin incomplete, metric 0, localpref 110, valid, internal, best

Extended Community: SoO:65001:1 RT:65001:101

mpls labels in/out nolabel/20

rx pathid: 0, tx pathid: 0x0

BGP routing table entry for 10.0.0.2:101:192.168.1.0/24, version 8

Paths: (1 available, best #1, no table)

Advertised to update-groups:

2

Refresh Epoch 1

65001, (Received from a RR-client)

10.0.0.2 (metric 10001) from 10.0.0.2 (10.0.0.2)

Origin incomplete, metric 0, localpref 90, valid, internal, best

Extended Community: SoO:65001:1 RT:65001:101

mpls labels in/out nolabel/20

rx pathid: 0, tx pathid: 0x0

PE1#show bgp vpnv4 unicast vrf CUST1-VRF1 192.168.1.0

BGP routing table entry for 10.0.0.1:101:192.168.1.0/24, version 13

Paths: (2 available, best #2, table CUST1-VRF1)

Advertise-best-external

Advertised to update-groups:

2

Refresh Epoch 1

65001, imported path from 10.0.0.2:101:192.168.1.0/24 (global)

10.0.0.2 (metric 20001) from 10.0.0.10 (10.0.0.10)

Origin incomplete, metric 0, localpref 90, valid, internal, backup/repair

Extended Community: SoO:65001:1 RT:65001:101

Originator: 10.0.0.2, Cluster list: 10.0.0.10 , recursive-via-host

mpls labels in/out 20/20

rx pathid: 0, tx pathid: 0

Refresh Epoch 1

65001

10.1.0.2 from 10.1.0.2 (192.168.1.1)

Origin incomplete, metric 0, localpref 110, valid, external, best

Extended Community: SoO:65001:1 RT:65001:101 , recursive-via-connected

mpls labels in/out 20/nolabel

rx pathid: 0, tx pathid: 0x0

PE1#show ip route vrf CUST1-VRF1 repair-paths

10.0.0.0/8 is variably subnetted, 2 subnets, 2 masks

C 10.1.0.0/30 is directly connected, FastEthernet1/0.10

L 10.1.0.1/32 is directly connected, FastEthernet1/0.10

B 192.168.1.0/24 [20/0] via 10.1.0.2, 00:41:09

[RPR][20/0] via 10.0.0.2, 00:41:09

B 192.168.2.0/24 [200/0] via 10.0.0.4, 00:41:09

PE1#show ip cef vrf CUST1-VRF1 192.168.1.0/24 detail

192.168.1.0/24, epoch 0, flags rib defined all labels

local label info: other/20

recursive via 10.1.0.2

attached to FastEthernet1/0.10

recursive via 10.0.0.2 label 20, repair

nexthop 10.0.101.1 FastEthernet0/1 label 18

nexthop 10.0.201.1 FastEthernet0/0 label 16

Still with "bgp advertise-best-external" configured on PE1 and PE2 only, the output below shows PE4 receiving both routes to the CPE1 LAN, via PE1 and PE2. However, only the route via PE1, preferred on local preference, is installed into RIB > FIB > CEF; no backup path via PE2 is installed. After a PE1-CPE1 link failure or a PE1 node failure, PE4 (and likewise PE3) would need to walk the BGP RIB to find a new valid path and then pass it to the FIB and CEF. If many routes prefer PE1 as their next hop and now need a new one, BGP's lengthy RIB walk times ensue.

PE4#show bgp vpnv4 unicast vrf CUST1-VRF1 192.168.1.0

BGP routing table entry for 10.0.0.4:101:192.168.1.0/24, version 6

Paths: (2 available, best #2, table CUST1-VRF1)

Advertised to update-groups:

1

Refresh Epoch 1

65001, imported path from 10.0.0.2:101:192.168.1.0/24 (global)

10.0.0.2 (metric 20001) from 10.0.0.10 (10.0.0.10)

Origin incomplete, metric 0, localpref 90, valid, internal

Extended Community: SoO:65001:1 RT:65001:101

Originator: 10.0.0.2, Cluster list: 10.0.0.10

mpls labels in/out nolabel/20

rx pathid: 0, tx pathid: 0

Refresh Epoch 1

65001, imported path from 10.0.0.1:101:192.168.1.0/24 (global)

10.0.0.1 (metric 20001) from 10.0.0.10 (10.0.0.10)

Origin incomplete, metric 0, localpref 110, valid, internal, best

Extended Community: SoO:65001:1 RT:65001:101

Originator: 10.0.0.1, Cluster list: 10.0.0.10

mpls labels in/out nolabel/20

rx pathid: 0, tx pathid: 0x0

PE4#show ip cef vrf CUST1-VRF1 192.168.1.0/24 detail

192.168.1.0/24, epoch 0, flags rib defined all labels

recursive via 10.0.0.1 label 20

nexthop 10.0.104.1 FastEthernet0/1 label 19

nexthop 10.0.204.1 FastEthernet0/0 label 20

Finally, with "address-family vpnv4 unicast; bgp advertise-best-external" configured on all four PE routers, they all send, receive and install backup paths to all CPE LAN ranges in their BGP RIB, FIB and CEF tables ("advertise-best-external" can instead be configured on a per-neighbor basis):

PE4#show bgp vpnv4 unicast vrf CUST1-VRF1 192.168.1.0

BGP routing table entry for 10.0.0.4:101:192.168.1.0/24, version 57

Paths: (2 available, best #2, table CUST1-VRF1)

Advertise-best-external

Advertised to update-groups:

1

Refresh Epoch 2

65001, imported path from 10.0.0.2:101:192.168.1.0/24 (global)

10.0.0.2 (metric 20001) from 10.0.0.10 (10.0.0.10)

Origin incomplete, metric 0, localpref 90, valid, internal, backup/repair

Extended Community: SoO:65001:1 RT:65001:101

Originator: 10.0.0.2, Cluster list: 10.0.0.10 , recursive-via-host

mpls labels in/out nolabel/20

rx pathid: 0, tx pathid: 0

Refresh Epoch 2

65001, imported path from 10.0.0.1:101:192.168.1.0/24 (global)

10.0.0.1 (metric 20001) from 10.0.0.10 (10.0.0.10)

Origin incomplete, metric 0, localpref 110, valid, internal, best

Extended Community: SoO:65001:1 RT:65001:101

Originator: 10.0.0.1, Cluster list: 10.0.0.10 , recursive-via-host

mpls labels in/out nolabel/20

rx pathid: 0, tx pathid: 0x0

PE4#show ip route vrf CUST1-VRF1 repair-paths 192.168.1.0

Routing Table: CUST1-VRF1

Routing entry for 192.168.1.0/24

Known via "bgp 100", distance 200, metric 0

Tag 65001, type internal

Last update from 10.0.0.1 00:04:34 ago

Routing Descriptor Blocks:

* 10.0.0.1 (default), from 10.0.0.10, 00:04:34 ago, recursive-via-host

Route metric is 0, traffic share count is 1

AS Hops 1

Route tag 65001

MPLS label: 20

MPLS Flags: MPLS Required

[RPR]10.0.0.2 (default), from 10.0.0.10, 00:04:34 ago, recursive-via-host

Route metric is 0, traffic share count is 1

AS Hops 1

Route tag 65001

MPLS label: 20

MPLS Flags: MPLS Required

PE4#show ip cef vrf CUST1-VRF1 192.168.1.0/24 detail

192.168.1.0/24, epoch 0, flags rib defined all labels

recursive via 10.0.0.1 label 20

nexthop 10.0.104.1 FastEthernet0/1 label 19

nexthop 10.0.204.1 FastEthernet0/0 label 20

recursive via 10.0.0.2 label 20, repair

nexthop 10.0.104.1 FastEthernet0/1 label 18

nexthop 10.0.204.1 FastEthernet0/0 label 16

PE1#show bgp vpnv4 unicast vrf CUST1-VRF1 192.168.2.0

BGP routing table entry for 10.0.0.1:101:192.168.2.0/24, version 82

Paths: (2 available, best #1, table CUST1-VRF1)

Advertise-best-external

Advertised to update-groups:

1

Refresh Epoch 2

65001, imported path from 10.0.0.4:101:192.168.2.0/24 (global)

10.0.0.4 (metric 20001) from 10.0.0.10 (10.0.0.10)

Origin incomplete, metric 0, localpref 110, valid, internal, best

Extended Community: SoO:65001:2 RT:65001:101

Originator: 10.0.0.4, Cluster list: 10.0.0.10 , recursive-via-host

mpls labels in/out nolabel/20

rx pathid: 0, tx pathid: 0x0

Refresh Epoch 2

65001, imported path from 10.0.0.3:101:192.168.2.0/24 (global)

10.0.0.3 (metric 20001) from 10.0.0.10 (10.0.0.10)

Origin incomplete, metric 0, localpref 90, valid, internal, backup/repair

Extended Community: SoO:65001:2 RT:65001:101

Originator: 10.0.0.3, Cluster list: 10.0.0.10 , recursive-via-host

mpls labels in/out nolabel/20

rx pathid: 0, tx pathid: 0

It's worth noting that all four PEs use their Loopback0 IP addresses in their VRF RDs, so that each PE originates a "different" route. "bgp advertise-best-external" advertises the eBGP path even when an iBGP path is preferred, and implicitly enables the installation of backup routes into the BGP RIB. For another PE to install a received backup path into the RIB, "address-family ipv4|vpnv4 unicast; bgp additional-paths install" is required. For example, suppose PE2 advertises its backup path to PE3 and PE4, but the "advertise-best-external" behaviour is not wanted on those PEs. Then BGP add-path install must be enabled on PE3 and PE4. When "advertise-best-external" is configured, installing received backup paths is implicit.

Per-prefix label allocation mode has full support for BGP PIC Edge. Per-VRF allocation mode can cause a transient loop when a PE-CE link goes down, lasting until the BGP control plane has converged onto the backup PE-CE link. Per-CE label mode is not supported at all for BGP PIC Edge (it might be coming in a future IOS-XR version?).

BGP Add-Path

Directly from the Cisco docs: "The advertisement of a prefix replaces the previous announcement of that prefix (this behavior is known as an implicit withdraw)...The BGP Additional Paths feature provides a way for multiple paths for the same prefix to be advertised without the new paths implicitly replacing the previous paths. Thus, path diversity is achieved instead of path hiding". Add-Path can achieve exactly the same results as best-external above, but with more granularity.

BGP Add-Path is a capability negotiated during BGP session establishment, for each address family activated between two peers. It must therefore be configured before the session comes up; enabling it on an established session requires a session reset. A Path ID is assigned to each path in a BGP NLRI, similar to the route distinguisher (RD) used in L3 VPNs. However, the Path ID can differ per address family and per prefix/next hop.
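The difference between implicit withdraw and Add-Path can be sketched as a change of key in the receiving router's Adj-RIB-In: the prefix alone versus (prefix, path ID). This toy model ignores encoding and capability negotiation. The path IDs 1 and 2 are arbitrary examples.

```python
from typing import Dict, Tuple


def receive_without_addpath(adj_rib_in: Dict[str, str], prefix: str, next_hop: str) -> None:
    """Classic BGP: a new announcement replaces (implicitly withdraws) the old one."""
    adj_rib_in[prefix] = next_hop


def receive_with_addpath(
    adj_rib_in: Dict[Tuple[str, int], str], prefix: str, path_id: int, next_hop: str
) -> None:
    """Add-Path: paths are keyed by (prefix, path ID), so different IDs coexist."""
    adj_rib_in[(prefix, path_id)] = next_hop


classic: Dict[str, str] = {}
receive_without_addpath(classic, "192.168.1.0/24", "10.0.0.1")
receive_without_addpath(classic, "192.168.1.0/24", "10.0.0.2")

addpath: Dict[Tuple[str, int], str] = {}
receive_with_addpath(addpath, "192.168.1.0/24", 1, "10.0.0.1")
receive_with_addpath(addpath, "192.168.1.0/24", 2, "10.0.0.2")

print(classic)  # {'192.168.1.0/24': '10.0.0.2'}
print(addpath)  # {('192.168.1.0/24', 1): '10.0.0.1', ('192.168.1.0/24', 2): '10.0.0.2'}
```

Re-announcing a prefix with an existing path ID still replaces that one path, so implicit withdraw applies per (prefix, path ID) rather than per prefix.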

router bgp 65000

address-family ipv4 unicast

! IPv4 on IOS-XE (03.16.01a.S [15.5(3)S1a])

bgp additional-paths select {all | backup | best 2 | best 3 | best-external | group-best }

bgp additional-paths install

bgp additional-paths {send [receive] | receive}

! or per neighbor

neighbor x.x.x.x additional-paths {send [receive] | receive}

neighbor x.x.x.x advertise additional-paths [all] [best 2|3] [group-best]

! IPv4 on IOS (15.2(4)S4)

bgp additional-paths select {all | backup | best 2 | best 3 | best-external | group-best }

bgp additional-paths install

bgp additional-paths {send [receive] | receive}

! or per neighbor

neighbor x.x.x.x additional-paths {send [receive] | receive}

neighbor x.x.x.x advertise additional-paths [all] [best 2|3] [group-best]

exit-address-family

address-family vpnv4 unicast

! VPNv4 on IOS-XE (03.16.01a.S [15.5(3)S1a])

bgp additional-paths install

bgp additional-paths {send [receive] | receive}

! VPNv4 on IOS (15.2(4)S4)

! If both keywords best-external and backup are specified, the system will install a backup path, best-external is less preferable

bgp additional-paths select {best-external [backup] | backup}

bgp additional-paths install

exit-address-family

exit

! IOS-XR (5.3.3)

route-policy PIC

set path-selection backup 1 install [multipath-protect] [advertise]

end-policy

router bgp 65000

address-family {ipv4|vpnv4} unicast

additional-paths selection route-policy PIC

exit

exit

BGP Local Convergence (a.k.a. BGP Local Protection)

BGP local protect provides simpler functionality than PIC Edge. With PIC Edge, the FIB is hierarchical and the backup paths are pre-computed. When a PE-CE link fails, only the next-hop pointers in the FIB need updating, which allows sub-second re-convergence even for many prefixes.
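The reason a hierarchical FIB converges so quickly can be shown with a toy Python model. In the hierarchical case, every CE-learned prefix points at one shared next-hop object that already holds a pre-computed backup, so failover is a single pointer swap. In a flat FIB, every prefix entry must be rewritten. `PathList`, `flat_failover`, `simulate` and the next-hop labels are hypothetical illustrations, not IOS or IOS-XR internals.

```python
# Sketch: PIC Edge-style hierarchical FIB vs. a flat FIB after a PE-CE failure.
# Assumption: toy structures, not router internals; "writes" counts forwarding
# entries rewritten. PRIMARY/BACKUP labels are hypothetical.
from typing import Dict, Tuple

PRIMARY = "CPE1 via Fa1/0.10"
BACKUP = "PE2 (10.0.0.2)"


class PathList:
    """One shared next-hop object that every CE-learned prefix points at."""

    def __init__(self, primary: str, backup: str) -> None:
        self.primary = primary
        self.backup = backup  # pre-computed backup, installed ahead of the failure
        self.active = primary

    def fail_primary(self) -> int:
        self.active = self.backup
        return 1  # a single pointer swap, however many prefixes share it


def flat_failover(fib: Dict[str, str]) -> int:
    """Flat FIB: every prefix holds its own next hop, so each one is rewritten."""
    writes = 0
    for prefix, next_hop in fib.items():
        if next_hop == PRIMARY:
            fib[prefix] = BACKUP
            writes += 1
    return writes


def simulate(n_prefixes: int) -> Tuple[int, int]:
    prefixes = [f"10.{i // 256}.{i % 256}.0/24" for i in range(n_prefixes)]
    shared = PathList(PRIMARY, BACKUP)
    hierarchical = {p: shared for p in prefixes}
    flat = {p: PRIMARY for p in prefixes}

    hier_writes = shared.fail_primary()
    flat_writes = flat_failover(flat)
    # Either way, every prefix now forwards via the backup PE.
    assert all(pl.active == BACKUP for pl in hierarchical.values())
    assert all(nh == BACKUP for nh in flat.values())
    return hier_writes, flat_writes


print(simulate(10_000))  # (1, 10000)
```

The work done in the hierarchical case stays constant as the prefix count grows. The flat case grows linearly with the number of prefixes.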

With local protect, the backup paths exist only in the BGP RIB. When the next hop to a prefix is lost, BGP sends a withdraw message to the other PEs. BGP then scans the RIB for the next-best path and installs it into the FIB. At this point, the original MPLS label entry in the LFIB is also updated so that traffic still arriving at the PE with the original label isn't dropped. The same label is kept for 5 minutes, but its LFIB entry now points to the backup path (via another PE).

Failure detection should be just as fast with local protect as with PIC Edge (for example, when BFD is used). Restoring a working backup path takes longer, however, because BGP must first select the new path from its RIB. On the other hand, the rest of the provider network doesn't need to process any updates for the local connection to that prefix to be restored, because the local PE keeps the existing label in its LFIB for 5 minutes before timing it out. The loss of connectivity is therefore longer than with PIC Edge, but still better than having no protection at all.
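The LFIB behaviour described above can be sketched in Python. The local label stays the same, the entry is repointed at the backup PE, and the entry times out after the 5-minute hold. The label and next-hop values reuse the PE1 output in this section: local label 20, CE next hop 10.1.0.2, and backup PE2 10.0.0.2 with out label 20. The 300-second hold comes from the text. `LfibEntry` and `LocalProtectLfib` are hypothetical names for illustration only.

```python
# Sketch of the local-protect LFIB behaviour described above.
# Assumptions: a toy LFIB keyed by local label; HOLD_SECONDS is the
# "5 minutes" from the text; values reuse the PE1 show output.
from dataclasses import dataclass
from typing import Dict, Optional

HOLD_SECONDS = 300


@dataclass
class LfibEntry:
    out_label: Optional[int]  # None means "No Label" (pop and forward to the CE)
    next_hop: str
    expires_at: Optional[float] = None  # None means the entry does not time out


class LocalProtectLfib:
    def __init__(self) -> None:
        self.entries: Dict[int, LfibEntry] = {}

    def pe_ce_link_down(self, in_label: int, backup_pe: str, backup_label: int, now: float) -> None:
        # Same local label, new forwarding target: the backup PE learned via BGP.
        self.entries[in_label] = LfibEntry(backup_label, backup_pe, now + HOLD_SECONDS)

    def lookup(self, in_label: int, now: float) -> Optional[LfibEntry]:
        entry = self.entries.get(in_label)
        if entry is not None and entry.expires_at is not None and now >= entry.expires_at:
            del self.entries[in_label]  # protection window over, label released
            return None
        return entry


lfib = LocalProtectLfib()
lfib.entries[20] = LfibEntry(out_label=None, next_hop="10.1.0.2")  # normal state
lfib.pe_ce_link_down(20, backup_pe="10.0.0.2", backup_label=20, now=0.0)
print(lfib.lookup(20, now=1.0))    # still forwarded, now via 10.0.0.2 with label 20
print(lfib.lookup(20, now=301.0))  # None: the label has timed out
```

Traffic from remote PEs that still uses label 20 keeps flowing during the hold period, even though those PEs have not yet processed the withdraw.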

Local protect cannot be configured with PIC Edge. Once PIC Edge is configured (such as with “bgp advertise-best-external”) the local protect configuration is automatically removed.

To enable local protect, simply configure “protection local-prefixes” under the VRF. Below, PE1 is the primary path towards CPE1, which advertises subnet 192.168.1.0/24 to both PE1 and PE2:

PE1#show run vrf CUST1-VRF1

Building configuration...

Current configuration : 716 bytes

ip vrf CUST1-VRF1

rd 10.0.0.1:101

protection local-prefixes

route-target export 65001:101

route-target import 65001:101

PE1#show ip vrf detail CUST1-VRF1

VRF CUST1-VRF1 (VRF Id = 1); default RD 10.0.0.1:101; default VPNID

Interfaces:

Fa1/0.10

VRF Table ID = 1

Export VPN route-target communities

RT:65001:101

Import VPN route-target communities

RT:65001:101

No import route-map

No global export route-map

No export route-map

VRF label distribution protocol: not configured

VRF label allocation mode: per-prefix

Local prefix protection enabled

PE1#show bgp vpnv4 unicast vrf CUST1-VRF1 192.168.1.0

BGP routing table entry for 10.0.0.1:101:192.168.1.0/24, version 15

Paths: (2 available, best #2, table CUST1-VRF1)

Advertised to update-groups:

2

Refresh Epoch 1

65001, imported path from 10.0.0.2:101:192.168.1.0/24 (global)

10.0.0.2 (metric 20001) from 10.0.0.10 (10.0.0.10)

Origin incomplete, metric 0, localpref 90, valid, internal

Extended Community: SoO:65001:1 RT:65001:101

Originator: 10.0.0.2, Cluster list: 10.0.0.10

mpls labels in/out 20/20

rx pathid: 0, tx pathid: 0

Refresh Epoch 1

65001

10.1.0.2 from 10.1.0.2 (192.168.1.1)

Origin incomplete, metric 0, localpref 110, valid, external, best

Extended Community: SoO:65001:1 RT:65001:101

mpls labels in/out 20/nolabel

rx pathid: 0, tx pathid: 0x0

PE1#show mpls forwarding-table vrf CUST1-VRF1 192.168.1.0

Local Outgoing Prefix Bytes Label Outgoing Next Hop

Label Label or Tunnel Id Switched interface

20 No Label 192.168.1.0/24[V] \

0 Fa1/0.10 10.1.0.2

PE2#show bgp vpnv4 unicast vrf CUST1-VRF1 192.168.1.0

BGP routing table entry for 10.0.0.2:101:192.168.1.0/24, version 52

Paths: (2 available, best #1, table CUST1-VRF1)

Not advertised to any peer

Refresh Epoch 2

65001, imported path from 10.0.0.1:101:192.168.1.0/24 (global)

10.0.0.1 (metric 20001) from 10.0.0.10 (10.0.0.10)

Origin incomplete, metric 0, localpref 110, valid, internal, best

Extended Community: SoO:65001:1 RT:65001:101

Originator: 10.0.0.1, Cluster list: 10.0.0.10

mpls labels in/out nolabel/20

rx pathid: 0, tx pathid: 0x0

Refresh Epoch 1

65001

10.1.0.6 from 10.1.0.6 (192.168.1.1)

Origin incomplete, metric 0, localpref 90, valid, external

Extended Community: SoO:65001:1 RT:65001:101

rx pathid: 0, tx pathid: 0

The PE with the failed link updates its local LFIB to point to PE2 while the rest of the network converges on the alternate path via PE2. The failed link is therefore "locally protected" against, hence the name "local protect". Once the network has converged onto PE2, PE1 updates its local LFIB again and no longer provides that backup path. This happens so quickly in the GNS3 test topology above that there is no useful example output to show here.

BGP Diverse Path

BGP Diverse-Path is a feature for route reflectors. A second RR becomes a shadow RR to the first one and advertises the next-best path (a backup path) to its route-reflector clients.
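A toy Python sketch of what the shadow RR adds from a client's point of view. The sketch makes three assumptions. First, best-path selection is reduced to highest local preference. Second, the RRs already hold both PE1's and PE2's paths; in the lab output later in this section, P2 initially holds only PE1's path, so in practice PE2 would also need to advertise its external path (for example with best-external). Third, the shadow RR falls back to the best path when no second path exists; this fallback is a hypothetical choice of the sketch, not documented Cisco behaviour. `Path`, `rank` and `reflect` are illustrative names.

```python
# Sketch: a normal RR and a shadow (diverse-path) RR reflecting the same RIB.
# Assumptions: best path = highest local preference (next hop as tie-break);
# the RR already holds both paths; the shadow RR falls back to the best path
# when no second path exists (assumed, not taken from Cisco documentation).
from typing import Iterable, List, NamedTuple


class Path(NamedTuple):
    next_hop: str
    local_pref: int


def rank(paths: Iterable[Path]) -> List[Path]:
    return sorted(paths, key=lambda p: (-p.local_pref, p.next_hop))


def reflect(paths: Iterable[Path], shadow: bool) -> List[Path]:
    ranked = rank(paths)
    if not shadow:
        return ranked[:1]             # RR1: best path only
    return ranked[1:2] or ranked[:1]  # RR2: next-best (backup) path


rr_rib = [Path("10.0.0.1", 110), Path("10.0.0.2", 90)]  # PE1 and PE2 paths
pe3_without = rank(set(reflect(rr_rib, False)) | set(reflect(rr_rib, False)))
pe3_with = rank(set(reflect(rr_rib, False)) | set(reflect(rr_rib, True)))
print([p.next_hop for p in pe3_without])  # ['10.0.0.1']
print([p.next_hop for p in pe3_with])     # ['10.0.0.1', '10.0.0.2']
```

With two ordinary RRs, the client receives the same best path twice. With one RR acting as a shadow RR, the client ends up with a second, diverse path that it can install as a backup.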

Using the same topology as before, the configuration on all PEs and P/RR devices has been "reset" back to the following:

# P1/RR1
router bgp 100
bgp router-id 10.0.0.10
bgp cluster-id 10.0.0.10
bgp log-neighbor-changes
no bgp default ipv4-unicast
neighbor 10.0.0.1 remote-as 100
neighbor 10.0.0.1 description P1
neighbor 10.0.0.1 update-source Loopback0
neighbor 10.0.0.2 remote-as 100
neighbor 10.0.0.2 description P1
neighbor 10.0.0.2 update-source Loopback0
neighbor 10.0.0.3 remote-as 100
neighbor 10.0.0.3 description P1
neighbor 10.0.0.3 update-source Loopback0
neighbor 10.0.0.4 remote-as 100
neighbor 10.0.0.4 description P1
neighbor 10.0.0.4 update-source Loopback0
!
address-family ipv4
exit-address-family
!
address-family vpnv4
neighbor 10.0.0.1 activate
neighbor 10.0.0.1 send-community extended
neighbor 10.0.0.1 route-reflector-client
neighbor 10.0.0.2 activate
neighbor 10.0.0.2 send-community extended
neighbor 10.0.0.2 route-reflector-client
neighbor 10.0.0.3 activate
neighbor 10.0.0.3 send-community extended
neighbor 10.0.0.3 route-reflector-client
neighbor 10.0.0.4 activate
neighbor 10.0.0.4 send-community extended
neighbor 10.0.0.4 route-reflector-client
exit-address-family

# P2/RR2
router bgp 100
bgp router-id 10.0.0.20
bgp cluster-id 10.0.0.20
bgp log-neighbor-changes
no bgp default ipv4-unicast
neighbor 10.0.0.1 remote-as 100
neighbor 10.0.0.1 description P1
neighbor 10.0.0.1 update-source Loopback0
neighbor 10.0.0.2 remote-as 100
neighbor 10.0.0.2 description P1
neighbor 10.0.0.2 update-source Loopback0
neighbor 10.0.0.3 remote-as 100
neighbor 10.0.0.3 description P1
neighbor 10.0.0.3 update-source Loopback0
neighbor 10.0.0.4 remote-as 100
neighbor 10.0.0.4 description P1
neighbor 10.0.0.4 update-source Loopback0
!
address-family ipv4
exit-address-family
!
address-family vpnv4
neighbor 10.0.0.1 activate
neighbor 10.0.0.1 send-community extended
neighbor 10.0.0.1 route-reflector-client
neighbor 10.0.0.2 activate
neighbor 10.0.0.2 send-community extended
neighbor 10.0.0.2 route-reflector-client
neighbor 10.0.0.3 activate
neighbor 10.0.0.3 send-community extended
neighbor 10.0.0.3 route-reflector-client
neighbor 10.0.0.4 activate
neighbor 10.0.0.4 send-community extended
neighbor 10.0.0.4 route-reflector-client
exit-address-family

# All PEs are the same with just their various local details like IP and router ID changed
# PE1 is the preferred next-hop towards 192.168.1.0/24 and PE4 towards 192.168.2.0/24

# PE1
router bgp 100
bgp router-id 10.0.0.1
bgp log-neighbor-changes
no bgp default ipv4-unicast
neighbor 10.0.0.10 remote-as 100
neighbor 10.0.0.10 description P1
neighbor 10.0.0.10 update-source Loopback0
neighbor 10.0.0.20 remote-as 100
neighbor 10.0.0.20 description P2
neighbor 10.0.0.20 update-source Loopback0
!
address-family ipv4
exit-address-family
!
address-family vpnv4
neighbor 10.0.0.10 activate
neighbor 10.0.0.10 send-community extended
neighbor 10.0.0.10 next-hop-self
neighbor 10.0.0.20 activate
neighbor 10.0.0.20 send-community extended
neighbor 10.0.0.20 next-hop-self
exit-address-family
!
address-family ipv4 vrf CUST1-VRF1
neighbor 10.1.0.2 remote-as 65001
neighbor 10.1.0.2 description CPE1
neighbor 10.1.0.2 activate
neighbor 10.1.0.2 next-hop-self
neighbor 10.1.0.2 as-override
neighbor 10.1.0.2 soo 65001:1
neighbor 10.1.0.2 route-map CPE1-IN in
neighbor 10.1.0.2 route-map CPE1-OUT out
exit-address-family

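As expected, only PE2 has two paths to the CPE1 prefix 192.168.1.0/24: its own eBGP path and PE1's path reflected by the RRs. On all PEs the preferred path is via PE1 (local preference 110). Because PE2 also prefers PE1's path over its own eBGP path (local preference 90), it does not advertise its own path to the RRs, so the RRs and the other PEs only learn the path via PE1: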

PE1#show bgp vpnv4 unicast vrf CUST1-VRF1 192.168.1.0

BGP routing table entry for 10.0.0.1:101:192.168.1.0/24, version 2

Paths: (1 available, best #1, table CUST1-VRF1)

Advertised to update-groups:

1

Refresh Epoch 1

65001

10.1.0.2 from 10.1.0.2 (192.168.1.1)

Origin incomplete, metric 0, localpref 110, valid, external, best

Extended Community: SoO:65001:1 RT:65001:101

mpls labels in/out 20/nolabel

rx pathid: 0, tx pathid: 0x0

PE2#show bgp vpnv4 unicast vrf CUST1-VRF1 192.168.1.0

BGP routing table entry for 10.0.0.2:101:192.168.1.0/24, version 4

Paths: (2 available, best #1, table CUST1-VRF1)

Not advertised to any peer

Refresh Epoch 1

65001, imported path from 10.0.0.1:101:192.168.1.0/24 (global)

10.0.0.1 (metric 20001) from 10.0.0.10 (10.0.0.10)

Origin incomplete, metric 0, localpref 110, valid, internal, best

Extended Community: SoO:65001:1 RT:65001:101

Originator: 10.0.0.1, Cluster list: 10.0.0.10

mpls labels in/out nolabel/20

rx pathid: 0, tx pathid: 0x0

Refresh Epoch 1

65001

10.1.0.6 from 10.1.0.6 (192.168.1.1)

Origin incomplete, metric 0, localpref 90, valid, external

Extended Community: SoO:65001:1 RT:65001:101

rx pathid: 0, tx pathid: 0

PE3#show bgp vpnv4 unicast vrf CUST1-VRF1 192.168.1.0

BGP routing table entry for 10.0.0.3:101:192.168.1.0/24, version 6

Paths: (1 available, best #1, table CUST1-VRF1)

Advertised to update-groups:

1

Refresh Epoch 1

65001, imported path from 10.0.0.1:101:192.168.1.0/24 (global)

10.0.0.1 (metric 20001) from 10.0.0.10 (10.0.0.10)

Origin incomplete, metric 0, localpref 110, valid, internal, best

Extended Community: SoO:65001:1 RT:65001:101

Originator: 10.0.0.1, Cluster list: 10.0.0.10

mpls labels in/out nolabel/20

rx pathid: 0, tx pathid: 0x0

P2#show bgp vpnv4 unicast all 192.168.1.0

BGP routing table entry for 10.0.0.1:101:192.168.1.0/24, version 2

Paths: (1 available, best #1, no table)

Advertised to update-groups:

1

Refresh Epoch 1

65001, (Received from a RR-client)

10.0.0.1 (metric 10001) from 10.0.0.1 (10.0.0.1)

Origin incomplete, metric 0, localpref 110, valid, internal, best

Extended Community: SoO:65001:1 RT:65001:101

mpls labels in/out nolabel/20

rx pathid: 0, tx pathid: 0x0

The following configuration is applied to P2 only:

router bgp 100
address-family vpnv4 unicast
maximum-paths 2
bgp bestpath igp-metric ignore
bgp additional-paths select backup
bgp additional-paths install
neighbor 10.0.0.3 advertise diverse-path backup
